Step 2: Import the Spark session and initialize it. You can name your application and master program at this step. We provide appName as "demo," and the master program is set as "local" in this recipe.

Step 3: We demonstrated this recipe using the "users_orc.orc" file. Make sure that the file is present in the HDFS by running hadoop fs -ls <full path to the location of file in HDFS>. The ORC file "users_orc.orc" used in this recipe is as below. Read the ORC file "users_orc.orc" into a dataframe (here, "df"). Let us now check the dataframe we created by reading the ORC file "users_orc.orc". This is how an ORC file can be read using PySpark.

By default Big SQL will use SNAPPY compression when writing into Parquet tables. This means that if data is loaded into Big SQL using either the LOAD HADOOP or [...] are compressed with Snappy and other Parquet files are compressed with GZIP. The same principle applies for ORC, text file, and JSON storage formats.
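Steps 2 and 3 above can be sketched roughly as follows. This is a minimal sketch, assuming PySpark is installed and that "users_orc.orc" is reachable at the path you pass in; the function name `read_orc_file` is illustrative and does not come from the original recipe.

```python
def read_orc_file(path, app_name="demo", master="local"):
    """Create (or reuse) a Spark session and load an ORC file into a DataFrame."""
    # Imported here so the module still loads on machines without PySpark.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .appName(app_name)  # application name, "demo" in this recipe
        .master(master)     # master program, "local" in this recipe
        .getOrCreate()
    )
    # DataFrameReader.orc loads ORC data from the given path into a DataFrame.
    return spark.read.orc(path)
```

Calling `df = read_orc_file("users_orc.orc")` and then `df.printSchema()` or `df.show()` is one way to check the dataframe; the exact path depends on where the file lives in your HDFS.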

ORC SNAPPY COMPRESSION FULL

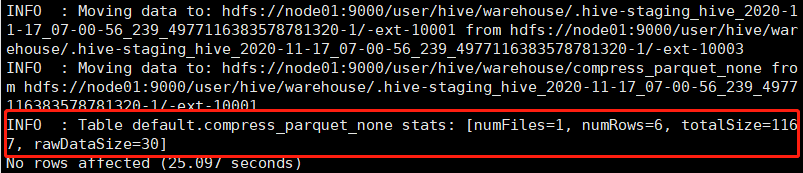

Step 1: Set up the environment variables for PySpark, Java, Spark, and the Python library. Provide the full path where these are stored in your instance. Please note that these paths may vary in one's EC2 instance.

Now I have created a duplicate table with ORC - SNAPPY compression and inserted the data from the old table into the duplicate table. I noticed that it took more loading time than usual; I believe that's because compression was enabled.
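Step 1 might look something like the sketch below. The directory paths are hypothetical placeholders, not values from the recipe, and the helper name `set_spark_env` is made up for illustration; substitute the locations on your own instance.

```python
import os


def set_spark_env(paths):
    """Export each non-empty path as an environment variable; return what was set."""
    applied = {}
    for name, value in paths.items():
        if value:  # skip entries that were left blank
            os.environ[name] = value
            applied[name] = value
    return applied


# Hypothetical locations: replace with the full paths on your instance.
example_paths = {
    "JAVA_HOME": "/usr/lib/jvm/java",
    "SPARK_HOME": "/opt/spark",
    "PYSPARK_PYTHON": "/usr/bin/python3",
}
applied = set_spark_env(example_paths)
```

These variables need to be set before the Spark session is created, since Spark reads them at startup.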

ORC format is a compressed data format reusable by various applications in big data environments. It is reliable and has quite efficient encoding schemes and compression options.
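To illustrate the compression options, here is a small sketch of writing a dataframe back out as ORC with a chosen codec. It assumes `df` is an existing PySpark DataFrame; the helper name `write_orc_compressed` and its defaults are illustrative choices, and which codec names are accepted depends on your Spark version.

```python
def write_orc_compressed(df, path, codec="snappy", mode="errorifexists"):
    """Write a PySpark DataFrame as ORC using the given compression codec."""
    # DataFrameWriter.orc takes the codec through its `compression` argument;
    # `mode` controls what happens when the target path already exists.
    df.write.orc(path, mode=mode, compression=codec)
    return path
```

Compressed writes trade some extra CPU time at load for smaller files on disk.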

ORC SNAPPY COMPRESSION HOW TO

Recipe Objective: How to read an ORC file using PySpark?

In this recipe, we learn how to read an ORC file using PySpark.

This is not to be confused with the internal (chunk level) compression codec used by the Parquet, AVRO and ORC formats. The internal compression name is usually added to a file name before the file format extension, for example: file1.gz.parquet, etc.
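The naming convention for the internal compression codec can be illustrated with a small parser. This is a heuristic sketch only: the function name and the list of recognized format extensions are assumptions, and a dotted base name such as "my.data.parquet" would be misread as carrying a codec.

```python
def split_compression_suffix(filename, formats=("parquet", "orc", "avro")):
    """Split 'name.codec.format' into (name, codec, format).

    codec is None when the name has no codec part; both codec and format
    are None when the extension is not a recognized format.
    """
    parts = filename.split(".")
    if parts[-1].lower() not in formats or len(parts) < 2:
        return filename, None, None
    if len(parts) >= 3:
        # The segment just before the format extension is taken as the codec.
        return ".".join(parts[:-2]), parts[-2], parts[-1]
    return parts[0], None, parts[-1]


print(split_compression_suffix("file1.gz.parquet"))
print(split_compression_suffix("users_orc.orc"))
```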
